Set up a Kubernetes multi-node cluster on AWS

There are a number of ways to set up a k8s cluster: on your workstation, on a VM, or in the cloud. The major cloud providers also offer k8s clusters as a managed service.

To set up a multi-node k8s cluster, I am using two AWS EC2 instances, one master and one worker node, with the specifications below:

2 EC2 instances

Amazon Linux 2 AMI 64-bit (x86)

Instance type: t2.micro (1 vCPU, 1 GiB memory)

Security group: allow all traffic (not a recommended approach, but it keeps things simple)

First, install Docker, since k8s needs a container runtime to launch containers.

sudo su - root

yum install docker -y

Start the Docker service and enable it so that it starts automatically after a reboot.

systemctl enable docker --now

To install kubeadm (the main tool for bootstrapping a multi-node k8s cluster), we first have to configure a yum repository for Kubernetes as below.

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

yum repolist

Now install kubeadm, which also pulls in kubectl and kubelet as dependencies.

yum install kubeadm --disableexcludes=kubernetes -y

Start the kubelet service and enable it at boot.

systemctl enable kubelet --now

systemctl status kubelet

The kubelet service will show as activating (it restarts every few seconds) because it is waiting for kubeadm to set up the cluster.

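The kubelet and the container runtime must use the same cgroup driver, and systemd is the recommended one on systemd-based hosts. Docker uses cgroupfs by default, so we have to ask Docker to use systemd instead. We can define this in the Docker config file and then restart the Docker service.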

cat <<EOF | tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl restart docker

K8s runs all its control-plane components as pods on the master node, so we need to download the corresponding container images.

docker image ls # run only on the master node

kubeadm config images pull # run only on the master node

docker image ls
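With Docker restarted and the images pulled, it may be worth confirming that the cgroup driver change actually took effect. The sketch below is one way to do that; check_cgroup_driver is a hypothetical helper name, not part of Docker or kubeadm, and it only compares two strings so that the real value can be fed in from docker info.

```shell
# Hypothetical helper: compares the cgroup driver Docker reports
# with the one we expect (systemd in this setup).
check_cgroup_driver() {
  expected_driver="$1"
  actual_driver="$2"
  if [ "$actual_driver" = "$expected_driver" ]; then
    echo "ok: cgroup driver is $actual_driver"
  else
    echo "mismatch: expected $expected_driver, got $actual_driver"
    return 1
  fi
}

# On a node (needs a running Docker daemon):
#   check_cgroup_driver systemd "$(docker info --format '{{.CgroupDriver}}')"
```

If the helper reports a mismatch, the usual suspects are a typo in /etc/docker/daemon.json or a Docker service that was not restarted.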

kubeadm also expects the tc (traffic control) tool, which is used to manage k8s networking, so we need to install it.

yum install iproute-tc -y

Pod traffic crosses a Linux bridge on each node, so bridged traffic has to be passed through iptables. Check the current bridge settings:

sysctl -a | grep net.bridge.bridge-nf-call

If the two settings above (for iptables and ip6tables) are missing or not set to 1, set them manually using the commands below.

cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
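As a quick sanity check on the file written above, the following sketch verifies that both bridge settings are set to 1 in a sysctl config file. bridge_settings_enabled is a hypothetical helper name. Note also that these sysctl keys normally only exist once the br_netfilter kernel module is loaded, so if sysctl does not list them, running modprobe br_netfilter first is likely needed.

```shell
# Hypothetical helper: succeeds only if both bridge settings
# are set to 1 in the given sysctl config file.
bridge_settings_enabled() {
  conf_file="$1"
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    awk -F= -v key="$key" '
      {
        k = $1; v = $2
        gsub(/[ \t]/, "", k)   # strip whitespace around the key
        gsub(/[ \t]/, "", v)   # strip whitespace around the value
        if (k == key && v == "1") found = 1
      }
      END { exit (found ? 0 : 1) }
    ' "$conf_file" || return 1
  done
}

# On a node:
#   bridge_settings_enabled /etc/sysctl.d/k8s.conf && echo "bridge settings present"
```

This only inspects the file; sysctl -a | grep net.bridge.bridge-nf-call remains the way to see the values the kernel is actually using.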

Run all the commands above on both the master and the worker node, except those marked as master-only.

K8s needs at least 2 CPUs and 2 GB of RAM on the master node, but the t2.micro instance type I am using has only 1 CPU and 1 GB of RAM, so we have to ask kubeadm to ignore this prerequisite.

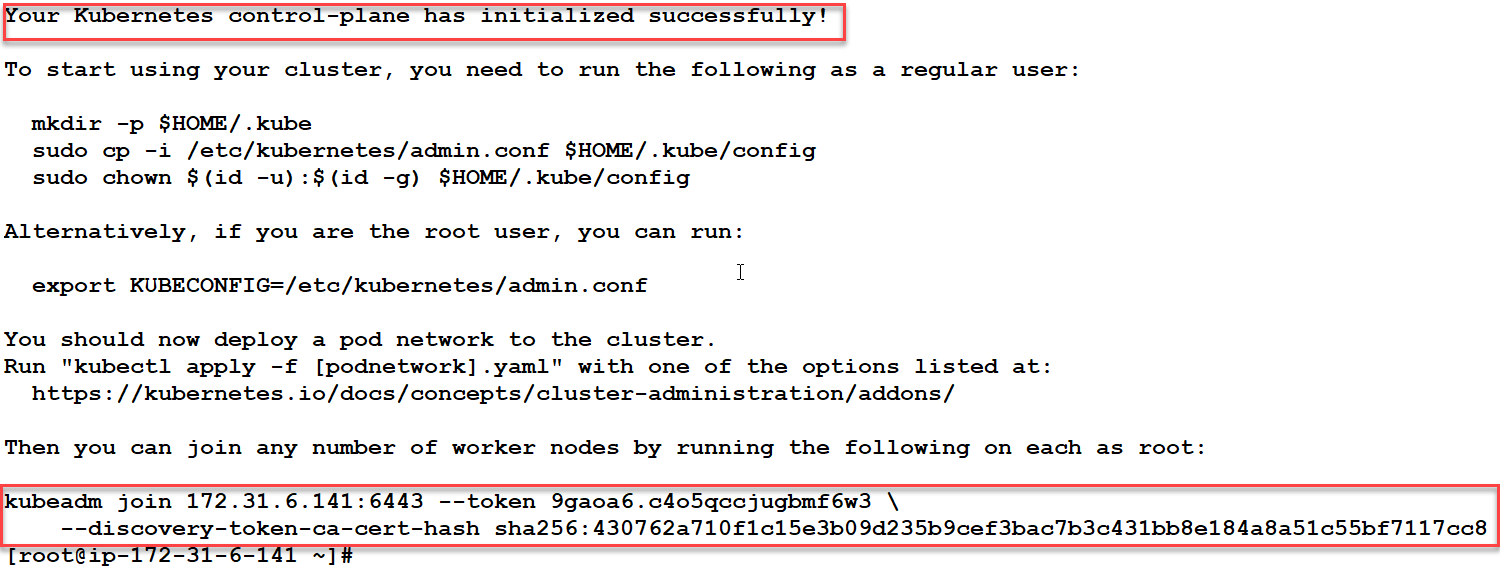

We can now run kubeadm init, which sets up our master node.

kubeadm init --pod-network-cidr=10.240.0.0/16 \
--ignore-preflight-errors=NumCPU --ignore-preflight-errors=Mem --node-name=master
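Rather than hard-coding which preflight checks to skip, the list could be derived from the machine itself. The sketch below is only an illustration: preflight_ignores is a hypothetical helper, and the thresholds (2 CPUs, 1700 MB) are my assumption about what kubeadm checks on a control-plane node, so treat them as adjustable.

```shell
# Hypothetical helper: prints the preflight checks to ignore for a
# given CPU count and memory size in MB.
# Assumed thresholds: fewer than 2 CPUs -> NumCPU, less than 1700 MB -> Mem.
preflight_ignores() {
  cpu_count="$1"
  mem_mb="$2"
  ignores=""
  if [ "$cpu_count" -lt 2 ]; then
    ignores="NumCPU"
  fi
  if [ "$mem_mb" -lt 1700 ]; then
    # Append with a comma only if something is already in the list.
    ignores="${ignores:+$ignores,}Mem"
  fi
  echo "$ignores"
}

# On a node:
#   cpus="$(nproc)"
#   mem_mb="$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)"
#   preflight_ignores "$cpus" "$mem_mb"
```

The printed value can be passed as a single comma-separated --ignore-preflight-errors flag, which is equivalent to repeating the flag as in the command above.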

If we check the kubelet status now (systemctl status kubelet), it is active (running).

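To use kubectl on the master node, we have to give it the address of the API server and the admin credentials. Both are in the admin kubeconfig file that kubeadm generated, so we copy it into place.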

mkdir -p $HOME/.kube/

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

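At the end of its output, kubeadm init prints a kubeadm join command. We can save that command, or regenerate it with the command below on the master node, and then run it on the worker node to join it to the cluster.

kubeadm token create --print-join-command

Now kubectl get nodes shows all nodes with STATUS as NotReady.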

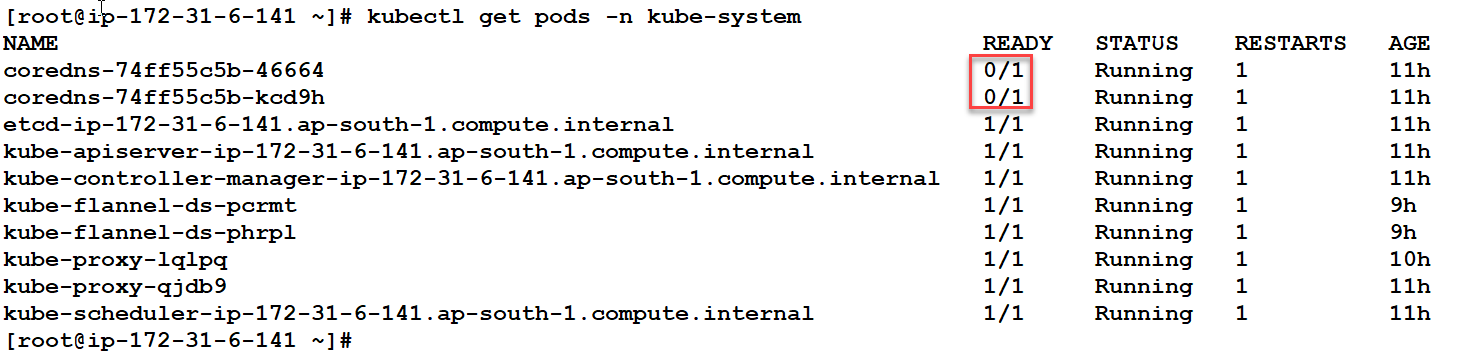
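To track the node status from a script instead of reading the table by eye, something like the following sketch could be used. count_not_ready is a hypothetical helper; it assumes the default column layout of kubectl get nodes, where STATUS is the second column, and it treats anything other than exactly "Ready" (including values such as "Ready,SchedulingDisabled") as not ready.

```shell
# Hypothetical helper: reads `kubectl get nodes --no-headers` output
# on stdin and prints how many nodes are not exactly "Ready".
count_not_ready() {
  awk 'NF >= 2 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# On the master node:
#   kubectl get nodes --no-headers | count_not_ready
```

At this point in the setup the count should equal the number of nodes, and it should drop to 0 once the network plugin is working.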

To make the nodes Ready we need a CNI (Container Network Interface) plugin, such as flannel, to complete the k8s networking.

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

For the coredns pods to come up as well, we need to change the network in flannel's config (the kube-flannel-cfg ConfigMap) to the pod network CIDR that we passed to kubeadm init (10.240.0.0/16), then delete the flannel pods so that they are recreated with the new config.

kubectl get configmap -n kube-system

kubectl edit cm kube-flannel-cfg -n kube-system

kubectl delete pods -l app=flannel -n kube-system
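An alternative to editing the ConfigMap by hand is to rewrite the network in the manifest before applying it. The sketch below assumes the manifest stores the pod network as a JSON "Network": "<cidr>" entry (as flannel's net-conf.json does, with 10.244.0.0/16 as the usual default); set_flannel_network is a hypothetical helper name.

```shell
# Hypothetical helper: rewrites the "Network" value in a flannel
# manifest read from stdin and writes the result to stdout.
# '#' is used as the sed delimiter because the CIDR contains '/'.
set_flannel_network() {
  pod_cidr="$1"
  sed "s#\"Network\": *\"[^\"]*\"#\"Network\": \"${pod_cidr}\"#"
}

# On the master node:
#   curl -fsSL https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml \
#     | set_flannel_network 10.240.0.0/16 \
#     | kubectl apply -f -
```

Done this way on a fresh cluster, the flannel pods start with the right network from the beginning, so the edit and delete steps should not be needed.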

Now we can see our control plane components are running

To verify our k8s setup, create a deployment with the httpd image.

kubectl create deploy test-deploy --image=httpd

kubectl get deploy

kubectl get pods

Expose the deployment so that it can be reached from the internet.

kubectl expose deploy test-deploy --port=80 --type=NodePort

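Finally, using the public IP of the master or worker EC2 instance and the NodePort assigned to the service (shown by kubectl get svc), we can access the httpd server from a browser at http://<public-ip>:<node-port>.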
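The same check can be done from a terminal. In the sketch below, nodeport_url is a hypothetical helper that just assembles the URL, and PUBLIC_IP is a placeholder for the public IP of one of the instances; the service name test-deploy comes from the expose command above.

```shell
# Hypothetical helper: builds the URL for a NodePort service
# from a node IP and the assigned node port.
nodeport_url() {
  node_ip="$1"
  node_port="$2"
  echo "http://${node_ip}:${node_port}/"
}

# On the master node (PUBLIC_IP is a placeholder you set yourself):
#   port="$(kubectl get svc test-deploy -o jsonpath='{.spec.ports[0].nodePort}')"
#   curl -s "$(nodeport_url "$PUBLIC_IP" "$port")"
```

Because the service is of type NodePort, the same port should answer on the public IP of either node, provided the security group allows it.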

Happy Learning 😄
